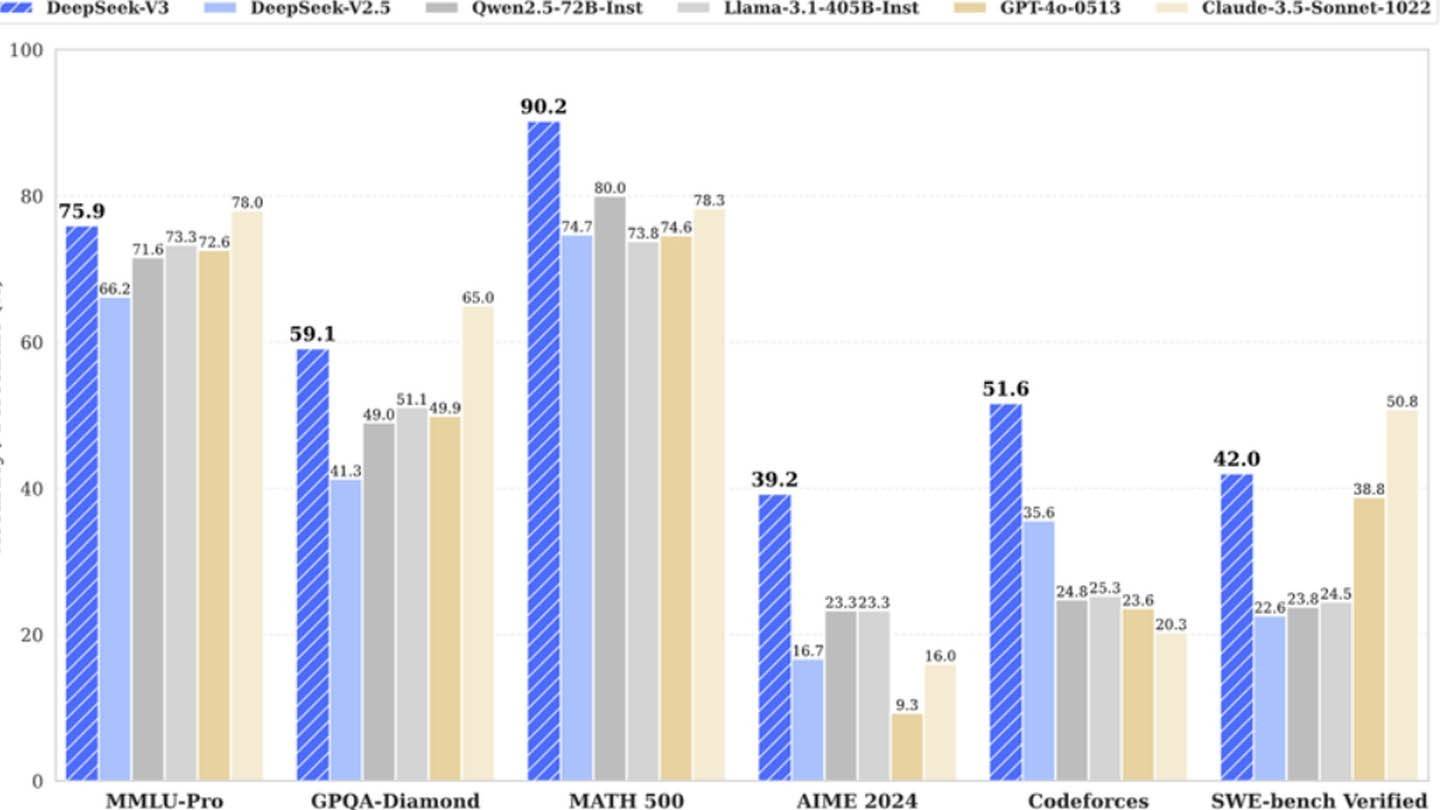

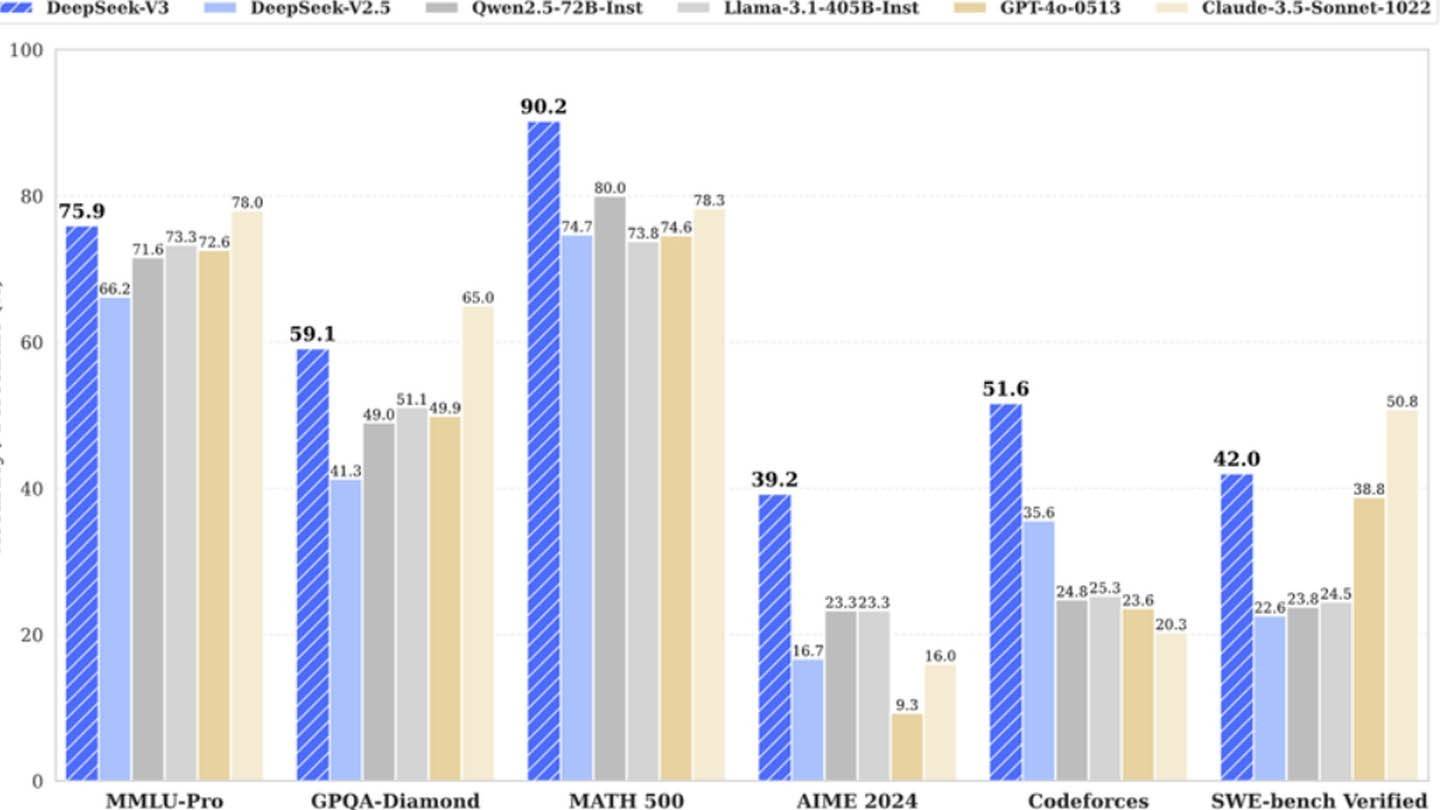

DeepSeek's surprisingly cost-effective AI model challenges industry giants. The company initially claimed that training its powerful DeepSeek V3 model cost a mere $6 million, but a closer look reveals a far more substantial investment.

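DeepSeek's V3 model combines three notable techniques: Multi-token Prediction (MTP), which predicts several upcoming tokens at once instead of one at a time; Mixture of Experts (MoE), which spreads the work across 256 specialized expert networks and activates only a few of them for each token; and Multi-head Latent Attention (MLA), an attention mechanism that compresses the keys and values it stores, reducing memory use while still focusing on the most relevant parts of a sentence.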
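
To make the Mixture of Experts idea concrete, here is a minimal sketch of top-k routing in plain Python. It is a generic softmax-gated router, not DeepSeek's actual implementation: the expert count of 256 comes from the article, while `TOP_K = 8`, the toy experts, and the names `route` and `moe_output` are assumptions chosen for illustration.

```python
import math
import random

NUM_EXPERTS = 256  # expert count quoted in the article
TOP_K = 8          # assumption: number of experts activated per token


def softmax(scores):
    """Turn raw gate scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_scores, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    # Renormalize so the selected experts' weights sum to 1.
    return [(i, probs[i] / norm) for i in chosen]


def moe_output(token_value, gate_scores, experts, top_k=TOP_K):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](token_value) for i, w in route(gate_scores, top_k))


rng = random.Random(0)
# Hypothetical toy experts: each one just scales its input by a fixed factor.
factors = [rng.uniform(0.5, 1.5) for _ in range(NUM_EXPERTS)]
experts = [lambda x, f=f: f * x for f in factors]
# In a real model the gate scores come from a learned layer; here they are random.
gate_scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route(gate_scores)
result = moe_output(2.0, gate_scores, experts)
print(len(selected), "of", NUM_EXPERTS, "experts used for this token")
```

The point of the design is that only the selected experts run for a given token, so the compute per token stays far below what running all 256 networks would require.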

Image: ensigame.com

However, SemiAnalysis uncovered DeepSeek's substantial infrastructure: approximately 50,000 Nvidia Hopper GPUs, including H800, H100, and H20 units, spread across multiple data centers. This represents a total server investment of roughly $1.6 billion and operational costs nearing $944 million.

DeepSeek is a subsidiary of High-Flyer, a Chinese hedge fund, and owns its data centers, which gives it tight control over its infrastructure and supports rapid innovation. Because it is self-funded, it can move quickly and make decisions without outside approval. The company attracts top talent, recruited primarily from Chinese universities, with some researchers earning over $1.3 million annually.

The initial $6 million figure only covers pre-training GPU usage, omitting research, refinement, data processing, and infrastructure. DeepSeek's actual AI development investment surpasses $500 million. Despite this, its lean structure allows for efficient innovation, unlike larger, more bureaucratic competitors.
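
The gap between the headline figure and the total can be checked with simple arithmetic. The sketch below assumes the GPU-hour count and rental rate given in DeepSeek's V3 technical report (about 2.788 million H800 GPU-hours at an assumed $2 per GPU-hour); neither number appears in this article, so treat both as outside assumptions. The $500 million total is the lower bound quoted above.

```python
# Assumptions from DeepSeek's V3 technical report, not from this article:
GPU_HOURS = 2_788_000        # H800 GPU-hours for the full training run
RATE_PER_GPU_HOUR = 2.00     # assumed rental price in USD

# Lower bound on total AI development investment, as quoted in the article.
TOTAL_INVESTMENT = 500_000_000

headline_cost = GPU_HOURS * RATE_PER_GPU_HOUR
share_of_total = headline_cost / TOTAL_INVESTMENT

print(f"Headline training cost: ${headline_cost:,.0f}")    # $5,576,000
print(f"Share of total investment: {share_of_total:.1%}")  # 1.1%
```

Under these assumptions, the widely quoted "$6 million" covers roughly one percent of what the company has spent on AI development.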

DeepSeek's success stems from substantial investment, technological advances, and a skilled team, so the "budget-friendly" narrative is misleading. Nevertheless, its costs remain significantly lower than its competitors': for example, DeepSeek's R1 model cost $5 million, compared with $100 million for GPT-4. DeepSeek shows that a well-funded independent AI company can compete successfully with established leaders, although its initial cost claims require careful interpretation.
